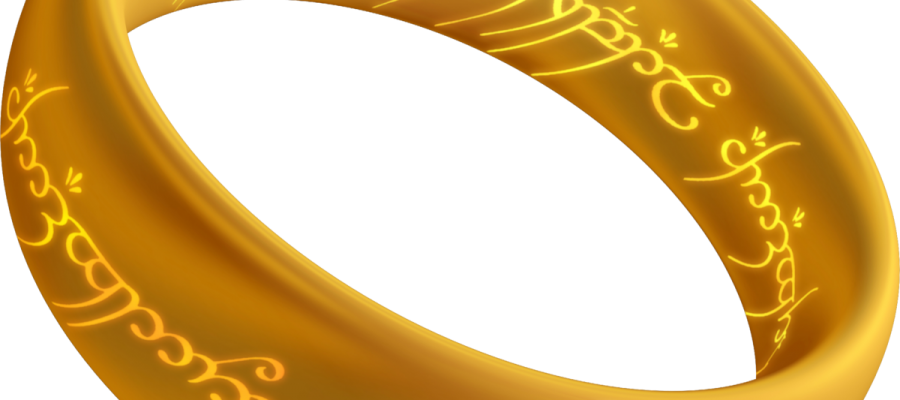

My company has been operating with a MySQL ring replication (also known as multi-master) topology for years now, and are about to do an architecture change. Here are the reasons why we chose it, some architectural considerations, and the benefits and drawbacks that I observed over the years.

Why We Chose It

The main drivers for master-master distributed architecture were 1) disaster recovery (DR) and 2) load balancing, both while maximizing utilization of computing resources.

The system that runs on this database is a high-density outbound dialing platform, for appointment reminders and other healthcare-related messaging. Ten years ago when the system was designed, Primary Rate Interface (PRI) telephony boards were the most reliable and high quality to achieve this. On the downside, PRI boards are very expensive and require dedicated T1 circuits that also incur a high monthly cost. Having a ‘passive’ DR site with idle equipment and circuits was, in my opinion, an inefficient use of capital. Having two active datacenters, both taking advantage of their local equipment and circuits, would be ideal.

Architectural Considerations

MySQL ring replication means ‘eventual synchronization’. As such, care must be taken not to write to the same record in both datacenters simultaneously. The reason is there may be an update to it ‘in flight’ via the MySQL relay logs while the update is occurring.

Our strategy to avoid this situation was by ‘partitioning’ customer data logically in the database tables. Specifically, we assign half of our customers to each datacenter via a column on a database table – let’s call it our ‘datacenter ID’ on the ‘customer’ table. All operations pertaining to that customer (in our case, phone, text, and email messaging) were performed from that datacenter. Therefore, all reads and writes for for a given account are confined to one datacenter, minimizing the chance of conflict.

The Reality

When database write volume was low, we had no conflicts and this strategy worked beautifully. However as time went on and the activity on the system increased substantially, ‘cracks’ began to form.

As transaction volume increases, the bandwidth required to keep nodes in sync increases as well. Since our bandwidth was limited, increased activity resulted in increased replication lag time. Increased lag means a larger window of time in which a replication conflict can occur.

We do have tables shared across customers that are not protected by the partitioning strategy described above. As a result, we started seeing situations whereby the same record was updated by multiple masters at almost the same time. As replication lag time increased, occurrences of this type of problem did as well.

Benefits

We did achieve the DR and load-balancing goals we set out to achieve.

However with replication, every write transaction must execute on every node. The result is that load isn’t distributed among master nodes much as one would expect.

Drawbacks

Replication conflicts are likely unless you partition your data completely and with extreme care. Replication conflicts can be difficult to reconcile and are to be avoided at all costs. Re-synchronizing can mean backup and restore from master to slave, can result in downtime and even data loss.

Conclusion

We had numerous issues over the years that stemmed from replication conflicts. Ultimately we recovered from all of them, not without varying degrees of irritation from customers and/or our team doing the recovery.

In hindsight, I feel this architecture served its purpose. Given the opportunity to do it again, I would 1) be more meticulous about data partitioning between masters and 2) watch bandwidth carefully to keep replication delay to a minimum.

Taken together, I think these could have made for an architecture with minimal conflicts. If your data needs do not allow for such partitioning, or your bandwith is limited, I’d strongly suggest avoiding such a setup.